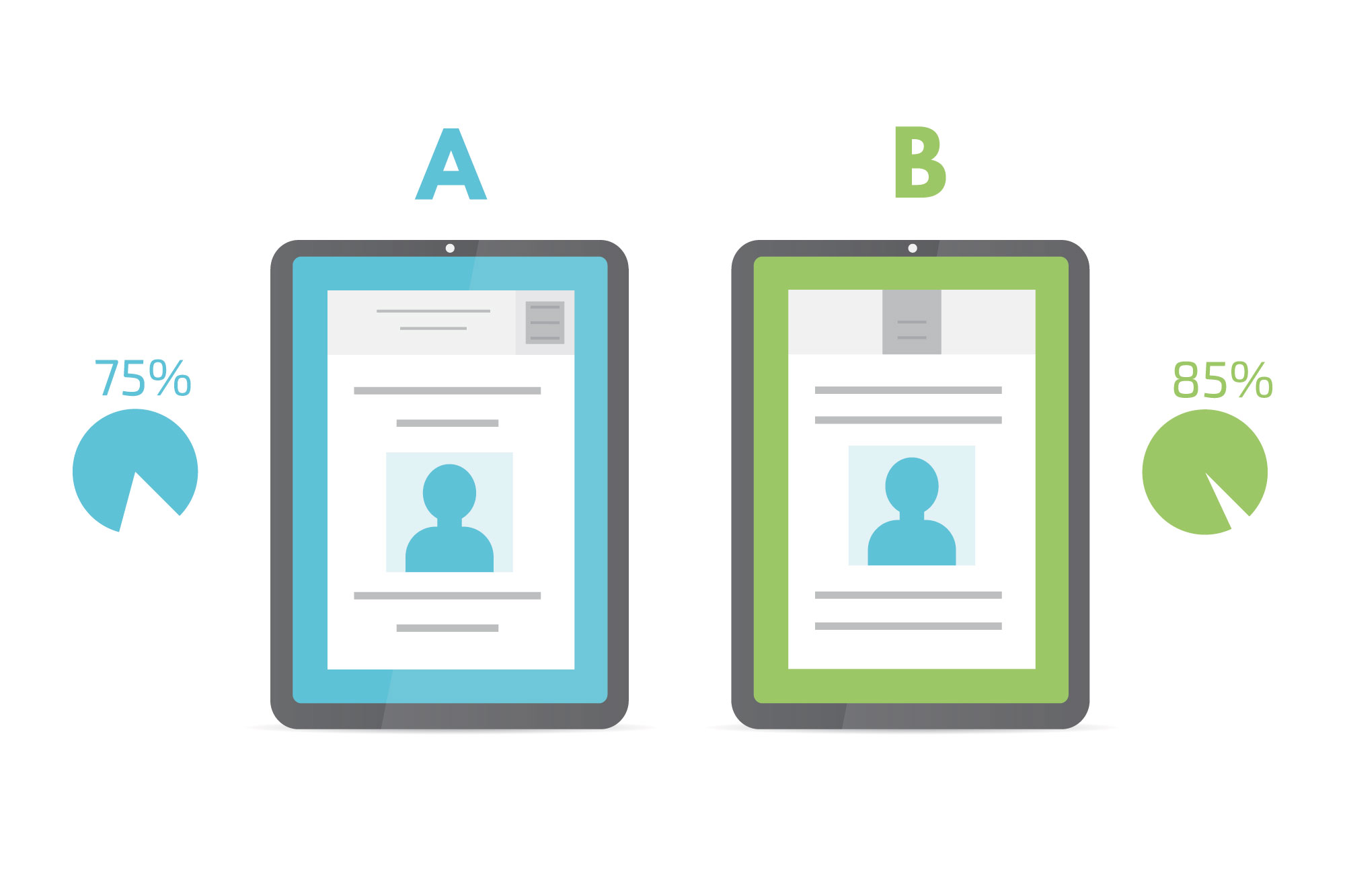

A/B testing is an experimentation process that lets researchers and designers determine which version (A or B) of a text, image, page, or user flow performs best.

Are you looking to optimize the performance of your product or website through experimentation? If so, A/B testing is a must-have in your UX research toolkit. In this article, you will learn about:

- The concept of A/B testing

- Importance of A/B tests

- Conducting an A/B test

- Effectively presenting your A/B test findings to your team

What is A/B Testing?

A/B testing, also known as split testing or bucket testing, is a method of comparing at least two versions of a product or feature to see which performs better. It involves randomly dividing users into two or more groups and showing them different versions of the product or feature.
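A common way to implement the random split is to hash a stable user identifier, so that each user sees the same version on every visit. The Python sketch below assumes each user has such an ID; the function `assign_variant` and the experiment name `cta-test` are hypothetical, and real experimentation platforms may assign users differently.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Assign a user to one variant of an experiment (hypothetical helper).

    Hashing the experiment name together with the user ID gives a
    pseudo-random but repeatable bucket: the same user always lands in the
    same group, and different experiments split users independently.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Simulate 10,000 hypothetical users in a hypothetical "cta-test" experiment.
    counts = Counter(assign_variant(f"user-{i}", "cta-test") for i in range(10_000))
    print(counts)
```

Running the file prints the number of users in each group, which should come out close to an even split. Including the experiment name in the hash keeps a user's group in one experiment from determining their group in another.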

The concept of A/B testing dates back about 100 years. In the 1920s, the statistician Ronald Fisher developed the basic principles of randomized experiments on which A/B testing rests. Although Fisher applied these ideas to agricultural experiments on crop growth, A/B testing today is used mostly in the tech and marketing industries.

Why use A/B testing?

A/B tests can answer questions like:

- Which call-to-action drives more users to sign up?

- Which of two button versions gets the higher click-through rate?

- Where should we place our call-to-action (CTA)?

- Is our design inclusive (e.g., for colorblind or older users)?

With A/B testing, you can reduce risk, optimize profit, and make better use of your organization's resources by basing design decisions on how users actually behave, even before launching the live product. Because the method is straightforward, A/B tests are also well suited to rapid testing of your products.

What’s the process of A/B Testing?

To conduct an A/B test, you will need to follow these steps:

- State the problem: Clearly define the problem you want to solve in your product and the metric you will use to measure success. For example, you might want to increase the conversion rate of a website or app, or improve the retention rate of a mobile game.

- Determine the element or feature to be tested: Identify the element or feature that you want to test, such as a website design, app feature, or email campaign. Make sure to choose an element or feature that is likely to have a significant impact on the problem you want to solve.

- Create the versions: Create two or more versions of the element or feature that you want to test. Make sure to keep all elements of the test as similar as possible, except for the element or feature being tested. For example, if you are testing a website design, keep the content and functionality the same, but change the design elements that you want to test.

- Set up the test: Randomly assign users to different groups and show each group a different version of the element or feature.

- Deploy the test: Run the A/B test long enough to capture meaningful data. The duration depends on the goals of the test, the sample size you need, and how much traffic your product receives. Avoid making changes to other elements of the product or website while the test is running, as this can affect the results.

- Analyze the results: Once the test is complete, compare the performance of the different versions using the metric you selected. Because small differences can arise by chance, use a statistical significance test to check whether the difference between versions is likely to be real. Tools like Optimizely and Stat Trek can help you calculate the sample size you need before the test starts and the statistical significance of your results afterward.

- Make a decision: If one version performed significantly better than the other, discuss implementing it with your team.
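The sample-size and significance checks from the deploy and analyze steps can be sketched with Python's standard library. This sketch assumes the metric is a conversion rate, uses a two-sided two-proportion z-test, and defaults to a 5% significance level and 80% power; the function names and numbers are hypothetical, and the sample-size formula is a common normal approximation, not necessarily what Optimizely or Stat Trek use.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_baseline: float, p_target: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed in EACH group to detect p_baseline -> p_target.

    Uses the normal-approximation formula for comparing two proportions
    with a two-sided test.
    """
    effect = p_target - p_baseline
    if effect == 0:
        raise ValueError("target rate must differ from baseline rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_baseline * (1 - p_baseline) + p_target * (1 - p_target)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference in conversion rates between A and B.

    Returns (z, p_value). A small p-value (e.g. below 0.05) suggests the
    difference is unlikely to be due to chance alone.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in conversions; the test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical plan: baseline 10% conversion, hoping to detect 12%.
    print(sample_size_per_group(0.10, 0.12), "users per group")
    # Hypothetical results: A converted 200 of 2,000 users, B 260 of 2,000.
    z, p = two_proportion_z_test(200, 2000, 260, 2000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

Compute the sample size before launching, and check significance only once each group has reached it; stopping the test as soon as the p-value dips below 0.05 inflates the rate of false positives.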

That seems like a lot of work for budding organizations, right? Thankfully, today's tools can fast-track your A/B testing process. With UXArmy's Remote Unmoderated Usability Testing, you can invite participants, conduct tests, and analyze your results, all in one platform. Visit UXArmy's website to learn how to create an A/B test for images and navigation flows with UXArmy. Try UXArmy for free today!

Presenting Your Findings to Your Team

- Start with the background of your research. Present your objectives, your hypotheses, and why A/B testing is the right method for answering your research questions.

- Briefly describe your test timeline: how long it took you to design the experiment, recruit participants, run the tests, and analyze the results.

- Back up your insights with numbers. Where applicable, report conversion rates, click-through rates, page visitor counts, and the statistical significance of the test.

- Substantiate quantitative data with relevant analysis. For example, suppose you're building a data dashboard for a client that serves the Asian market. You may notice that many test participants in India prefer bar graphs that use both colors and patterns over those that use plain colors only. A relevant explanation could be that India has one of the world's largest colorblind populations, and adding patterns fills accessibility gaps in the design for many people in the country.

The image on the right shows the color-blind-friendly version of the graph. (Source: Smashing Magazine)

- Be straightforward with your conclusions and recommendations. Tie your final words back to your hypotheses and objectives. Tell your teammates what needs to be done next, for example: Do we need to keep the current page structure? Should we change the button colors? Which logo should we use for the brand?
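When backing up insights with numbers, it can help to report the lift alongside a confidence interval rather than a bare significance result. A minimal sketch, assuming a conversion-rate metric and using the simple Wald (normal-approximation) interval, which is one of several possible choices; `summarize_ab` and the example figures are hypothetical.

```python
import math
from statistics import NormalDist


def summarize_ab(conv_a: int, n_a: int, conv_b: int, n_b: int,
                 confidence: float = 0.95) -> dict:
    """Headline numbers for an A/B result (hypothetical helper).

    Reports each version's conversion rate, the absolute and relative lift
    of B over A, and a Wald confidence interval for the difference B - A.
    """
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    diff = rate_b - rate_a
    se = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        "absolute_lift": diff,
        "relative_lift": diff / rate_a if rate_a else float("nan"),
        "ci_low": diff - z * se,
        "ci_high": diff + z * se,
    }


if __name__ == "__main__":
    # Hypothetical results: A converted 200 of 2,000 users, B 260 of 2,000.
    s = summarize_ab(200, 2000, 260, 2000)
    print(f"A: {s['rate_a']:.1%}  B: {s['rate_b']:.1%}")
    print(f"Lift: {s['absolute_lift'] * 100:+.1f} percentage points "
          f"({s['relative_lift']:+.0%} relative)")
    print(f"95% CI for the difference: {s['ci_low'] * 100:+.1f} "
          f"to {s['ci_high'] * 100:+.1f} percentage points")
```

An interval that stays entirely above zero tells your team both that B likely performs better and roughly by how much, which is often easier to act on than a p-value alone.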

A/B testing compares two versions of something to see which one performs better. Other methods serve similar goals, such as comparing many variations at the same time, asking people which version they prefer, or trying different variations with different groups of people.

Some examples of tests similar to A/B testing include:

Multivariate testing: This method tests multiple variables simultaneously to understand how they interact and to identify the most effective combination.

Preference testing: This method involves collecting data on which version of a product or service is preferred by a group of users. This can be conducted through a variety of methods, including surveys, interviews, focus groups, or online polls.
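To see why multivariate testing usually needs more traffic than a simple A/B test, it helps to enumerate the combinations. This sketch assumes a full-factorial design (every variation of every variable is tested together), which is one common way to run a multivariate test; the page elements and the traffic figure are hypothetical.

```python
from itertools import product

# Hypothetical page elements and their variations.
variables = {
    "headline": ["Start your free trial", "Try it free for 14 days"],
    "button_color": ["green", "blue", "orange"],
    "hero_image": ["product.png", "people.png"],
}


def full_factorial(variables: dict) -> list:
    """Return every combination of variations as a list of dicts."""
    names = list(variables)
    return [dict(zip(names, combo)) for combo in product(*variables.values())]


if __name__ == "__main__":
    combos = full_factorial(variables)
    print(len(combos), "combinations")
    # With a fixed pool of visitors, each extra combination means fewer
    # visitors per version, so multivariate tests need more traffic.
    visitors = 12_000  # hypothetical traffic
    print(visitors // len(combos), "visitors per combination")
    for combo in combos[:3]:
        print(combo)
```

Three variables with 2, 3, and 2 variations already produce 12 versions, so the same traffic is split 12 ways instead of 2.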

In conclusion, A/B testing is a powerful tool for optimizing the performance of websites, products, or other systems based on how real users respond to them. By carefully designing and running A/B tests and presenting the findings effectively to your team, you can make informed decisions that drive business growth. It is also crucial to choose the appropriate type of test by assessing the objectives of the research and the resources available within the organization.

Keep in mind that A/B testing is a continuous process: regularly test and optimize your products and features to make sure they meet the evolving needs and preferences of your users. Doing so helps you stay ahead of the competition.