UX Research at a Crossroads

UX research didn’t arrive with trumpets; it arrived with headphones, notebooks, and awkward silence: the kind that tells you something important is hiding beneath a user’s words. For a while, that quiet work became a loud differentiator. As digital products matured and competition intensified, teams learned the hard way that guessing is expensive. They hired researchers, built labs, and added the language of “insights” to their strategy decks.

Fast-forward to 2025 and the ground feels unsteady again. The tech industry is grappling with a new calculus: ship faster, spend less, show impact. At the same time, AI tools can transcribe interviews, cluster themes, and spit out a passable “summary” in minutes. In that atmosphere, it’s not surprising that some leaders have started saying the thing that stings: Why do we need researchers at all?

Designer and career strategist Sarah Doody captured this mood in a widely shared post, quoting a line she’s heard in real conversations: “We don’t need researchers anymore… we can use AI synthesis for everything.” She quoted it not because it’s true, but because it has become a common provocation. If you practice research, you can feel the temperature shift when a remark like that lands. It puts the profession on the defensive.

And yet, the picture is not as simple as a decline narrative. Teams that compete on experience (financial apps that must feel safe, healthcare tools that must feel humane, AI-powered products that must feel controllable) have doubled down on understanding people, not just patterns. What’s changing isn’t the need for human insight; it’s the shape of the researcher’s job, the clock speed of discovery, and the expectations for visible business impact.

This article is a clear-eyed tour of the new landscape: what’s threatening UX research roles, what’s merely noise, and how practitioners can adapt without losing the soul of their work.

Challenge #1: The “AI Will Replace Researchers” Narrative

Every researcher has heard a version of it: Why spend weeks interviewing, synthesizing, and writing when a tool can analyze transcripts and cluster responses in seconds? The narrative is seductive because it rides on real progress. Models can summarize. They can propose themes. They can even generate “personas” that read reasonably well at first glance.

But fast synthesis is not the same as sound judgment. It doesn’t know which contradictions matter. It doesn’t understand power dynamics in a meeting, or the way a participant’s eyes dart to a spouse off camera before answering a “simple” question about money. AI can accelerate parts of the workflow; it can’t decide what’s ethically right, strategically relevant, or emotionally resonant.

That’s why prominent practitioners keep reframing the story. Nikki Anderson is blunt about the boundary: “AI can’t replace your expertise, but it can take the tedious tasks off your plate.” And Eniola Abioye adds the posture researchers should take into the room: “AI isn’t here to replace you… think of AI as your research co-pilot.” The message from the field is consistent: use the speed; keep the steering wheel.

The job, then, is not to deny what machines can do. It’s to decide what they should do in your practice, and to show stakeholders that a thin layer of AI-authored bullet points is not the same as evidence-backed, context-rich understanding.

Challenge #2: Productivity Metrics vs. Human Understanding

In tough markets, companies worship at the altar of productivity. Ship counts, lines of code, experiments launched: metrics of motion can eclipse metrics of meaning. Research gets framed as a cost, or worse, as the thing “slowing us down.”

But rushing past human reality is a strange way to save time. Teams that skip discovery often end up building the wrong thing faster: the feature nobody wanted; the AI assistant that creeps people out; the onboarding that looks beautiful and quietly bleeds trust. Those mistakes are invisible on the roadmap until they become very visible in churn, support volume, and brand damage.

Practitioners feel that squeeze. In community threads, you see the frustration boil over: people assume they can paste transcripts into a chatbot and call it insight, with no regard for sample bias, data quality, or context. As one discussion summarized, without human oversight you can’t even tell when a model’s “insight” is simply wrong.

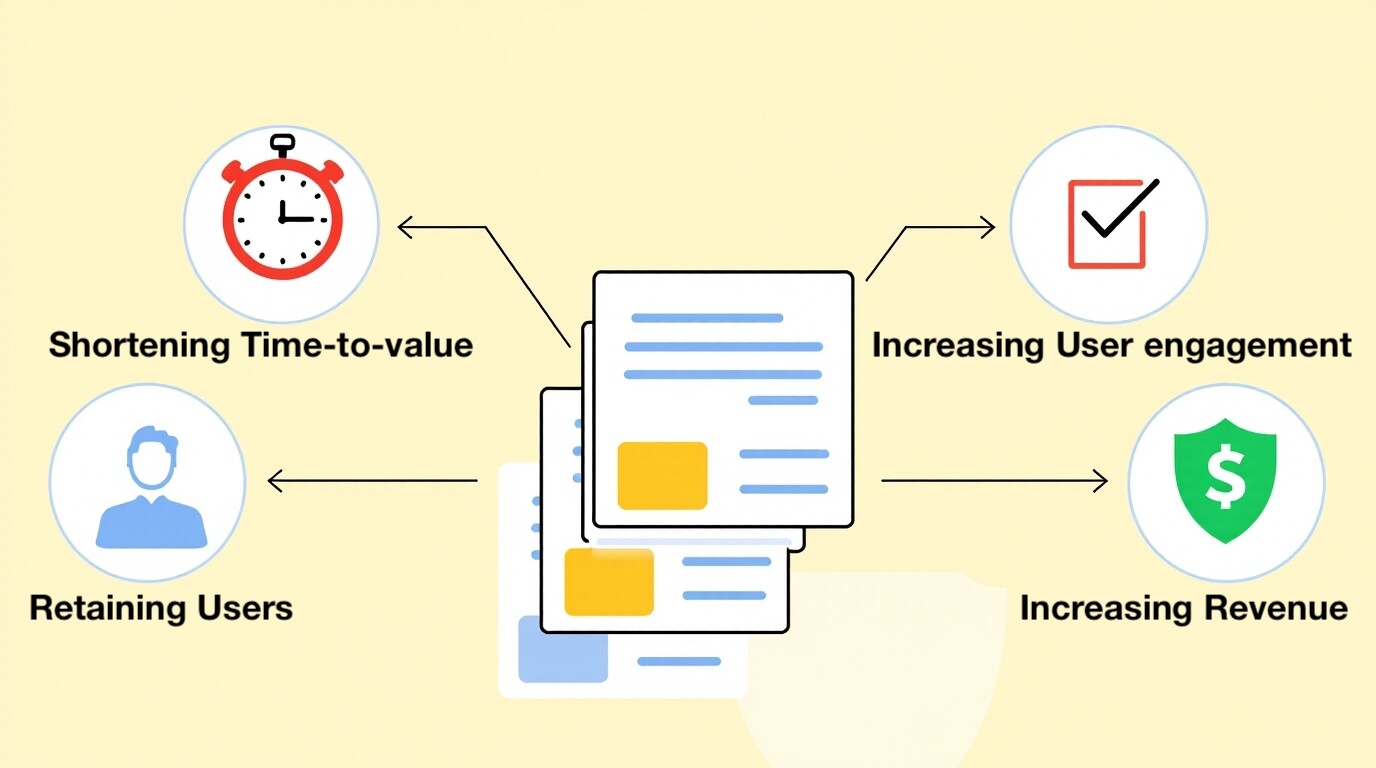

If you want senior leaders to treat research as a multiplier and not a drag, speak their language. Don’t stop at “users are confused.” Say: “this friction is driving 18% of support contacts,” or “this misunderstanding is killing trial-to-paid conversion.” Tie your evidence to time-to-value, activation, retention, and revenue protection. The point is not to abandon craft for KPIs; it’s to connect craft to KPIs so your partners see the cost of skipping it.

Challenge #3: Shrinking or Restructured Research Teams

Some organizations really are cutting back. The work still needs doing, so it gets pushed to designers, PMs, and sometimes support. “Research democratization” can be healthy (more people meeting real users is good), but it can also produce shallow, confirmatory studies that exist to bless a pre-chosen direction.

Contrast that with companies operating at real scale or in high-risk domains. They still invest in dedicated researchers because they know how quickly surface-level “insights” fall apart under load. Those teams need people who can move from usability triage to strategy, who can critique an AI roadmap with an ethics lens, who can influence product direction instead of rubber-stamping it.

A recent UXPA perspective put it plainly: “AI tools are increasingly adept at automating repetitive tasks and surfacing patterns. But what they can’t do… is navigate the political, emotional, and strategic dimensions of product development.” The more your role lives in those dimensions, the harder it is to automate, and the more valuable you become when budgets tighten.

If your org is restructuring, the pivot is from service provider to partner. Don’t wait for intake forms. Join planning conversations. Offer options and trade-offs. Co-own success metrics. When research moves upstream, it rarely gets cut first.

Challenge #4: Misunderstood Value in AI-Driven Organizations

Data-rich companies can start to believe they already know everything. Dashboards hum. LLMs summarize tickets. Experiment platforms churn out p-values. So why, a VP might ask, do we need interviews at all?

Because data shows what happened. It rarely shows why. Because a 3% lift might hide a 30% trust issue in a critical segment. Because there’s a canyon between “most people clicked continue” and “the way we ask for permissions makes new parents feel judged.”

This is where researchers earn their keep as sense-makers. The work is not to worship or reject AI, but to situate it: what it’s good for, where it’s blind, how to interpret it. Sarah Doody’s caution about “AI synthesis for everything” is really a call to maintain standards: evidence should be traceable, representative, and humanly meaningful before it’s used to steer a roadmap.

In AI-heavy teams, another layer of value emerges: responsible design. Who gets excluded by automated decisions? What failure modes hurt the most vulnerable? What interactions make the system feel manipulative? Those are research questions as much as technical ones, and they don’t answer themselves.

Challenge #5: Balancing Speed with Depth

If your calendar looks like a game of Tetris, you’re not alone. Two-week sprints and quarterly re-prioritization have trained orgs to expect insight on demand. Some researchers respond by slimming everything down; some refuse to budge and get sidelined. The professional move is to layer.

You keep a pulse: a small, steady cadence of user contact that keeps the team grounded and feeds refinement decisions. And you protect depth: discrete windows of rigorous discovery for the big bets, such as new segments, new markets, and new AI-driven capabilities. AI can help here in practical ways: transcribing, sketching initial clusters, even simulating stakeholder pushback so your narrative is tight before you present.

The trick is to let AI boost your speed without letting it tempt you into false certainty. As Helio put it in a succinct post: “AI should support, not replace, UX researchers.” Support means using the machine to widen options and compress busywork while you protect the standards that keep outcomes honest.

Challenge #6: The Emotional and Career Anxiety of UX Researchers

There’s a quieter story behind all of this: the toll on people. It’s hard to practice curiosity in a culture of fear. It’s hard to advocate for nuance when budgets are tight and the loudest metric is “velocity.” It’s hard to hear that your craft, the one you spent years developing, might be a commodity.

Many researchers are responding by widening their skills. Some are learning analytics to speak more fluently with data teams. Some are getting better at facilitation, because a well-run working session can change a roadmap in an afternoon. Some are leaning into evidence storytelling, pairing a single, unforgettable user moment with hard numbers so leaders can feel and measure the stakes.

If you’re feeling that anxiety, you’re not alone. But it’s worth remembering that every previous technology wave (automation, cloud, mobile, growth experimentation) triggered similar headlines. The roles that survived were the ones that absorbed the new tools and stayed stubborn about the parts of the craft that make the work humane.

Why Human Insights Won’t Disappear

Empathy is not a buzzword when you’re sitting in the living room of a family juggling bills, or watching a nurse work around a glitch to save time for a patient. Those scenes carry implications that don’t fit neatly into a cluster label. They change what “value” means. They change what “friction” means. They change what “success” means.

Cultural nuance is the other big reason human insight persists. An interaction that feels friendly in one country can feel flippant in another. A “clever” empty state that helps a power user might patronize a novice. Trust patterns are local and learned; someone has to go learn them.

Finally, there’s the matter of influence. Executives don’t move because a dashboard twitched. They move when someone puts an undeniable human truth on the table, paired with a feasible path to capitalize on it or fix it. Good researchers are translators. They take the mess of reality and make it actionable without sanding off the edges that matter.

The UXPA perspective quoted earlier captures what keeps this work human: tools can automate repetitive tasks and surface patterns, but they can’t navigate the political, emotional, and strategic dimensions of product development. Tools help. Judgment leads.

Field Notes: When Research Changes the Problem (and When It Should Get Out of the Way)

To keep this grounded, two quick stories.

A fintech team was convinced their onboarding drop-off was about UI complexity. After five short conversations with new parents-done remotely, on phones with kids in the room-they realized the real blocker was fear: people didn’t feel safe connecting bank accounts before they understood why the app was asking. The fix wasn’t a faster flow; it was a staged trust pattern: a preview mode, a “why we ask” explainer in plain language, and screenshots of what would and wouldn’t be shared. Conversion followed. Not because the flow got shorter, but because the emotion got addressed.

A SaaS pricing team, by contrast, didn’t need a month of interviews to decide between two page layouts. They needed experiments and a few well-chosen copy tests. The problem was bounded, reversible, and easily measurable. Research’s role was to step back and let testing do its job.

The lesson is not that one method is better. It’s that experienced teams know which hill they’re on, and when to bring empathy to bear versus letting numbers carry the day.

Action Plan: How UX Researchers Can Stay Future-Proof

Here’s the frank playbook many strong teams are running (and that individual researchers can adopt, even if the org is still catching up).

1) Put AI to work deliberately.

Use it like a force multiplier, not a crutch. Let it transcribe, draft, and cluster. Ask it to propose counter-arguments to your findings so you can steel-man before you present. But always route outputs through human review. If you can’t trace a claim to evidence, don’t ship it.

2) Speak impact, not just insight.

Tie your work to adoption curves, activation milestones, trial-to-paid conversion, support deflection, risk mitigation. If your organization cares about a North Star metric, make sure your research projects are explicitly connected to moving it or protecting it. Frame trade-offs in business terms, not just UX terms.

3) Change rooms, not just slides.

Don’t only deliver decks; facilitate decisions. Co-create options with PMs and engineers. Bring a sketch to the meeting, not a lecture. Walk in with two or three viable paths, the evidence behind each, and a recommendation. Decisions made with you are stickier than decisions handed to you.

4) Protect depth where it matters most.

Not every question needs diary studies; some do. Reserve deep research for irreversible bets, sensitive domains (health, finance, safety), and AI features with real downside risk. Make a wall chart of “When we go deep vs. when we go fast” and get leadership to approve it. That alignment will save you from weekly debates.

5) Build a lightweight insight spine.

Whatever tool you use, keep evidence searchable and traceable. Tag clips to claims. Annotate charts with the moment that inspired the test. Rotate observers through sessions so the burden of proof isn’t yours alone.

6) Keep the human edge sharp.

Double down on the skills machines can’t absorb: facilitation that defuses tension; synthesis that respects contradiction; ethical instincts that keep harm out of the backlog; storytelling that travels.

Where does a research platform help? This is the right place to talk about tools because they either amplify those habits or get in the way. UXArmy has been evolving its research platform with that philosophy: auto-tagging to shave hours off synthesis, AI interview summaries that make first-pass analysis fast but traceable, and on-the-fly translations so cross-regional teams can hear users without waiting on vendors. The intent is not to replace the researcher; it’s to clear the runway so researchers spend most of their time where they’re irreplaceable: on judgment, empathy, and influence.

When you combine disciplined habits with thoughtful tooling, you get a practice that moves at the speed of modern product work without ceding its standards.

Conclusion: Research Under Pressure-But Still Indispensable

The threats are real. Budgets are tight. AI is loud. Some orgs are trimming what they don’t immediately understand. But if you zoom out, the arc looks familiar. Every leap in capability forces a leap in craft. The professionals who survive aren’t the ones who ignore the new tools; they’re the ones who absorb them and keep insisting on the parts that make the work humane.

From here on, UX research is less about rituals and more about results. It’s less about holding the mic and more about shaping the room. It’s less about defending a role and more about owning outcomes alongside product and engineering.

So no, the job isn’t vanishing. It is changing. If you treat AI as a co-pilot, connect your evidence to business value, and protect the uniquely human edges of your craft, you won’t just keep your seat at the table. You’ll set the agenda for what the table talks about next.

Thoughts, comments, or questions? Feel free to shoot us an email at hi@uxarmy.com

Frequently asked questions

Are UX researchers being replaced by AI?

No. AI can automate repetitive parts of the workflow, but not the judgment, ethics, and context that make research valuable. As Nikki Anderson puts it, AI can remove tedium; it can’t replace expertise.

How can teams overcome participant recruitment challenges?

Recruiting the right users is time-consuming and often constrained by availability and budget. Solutions include using panel recruitment, user testing platforms, or remote usability testing tools to widen your pool and ensure diversity.

Why are some companies cutting UX research roles?

Cost pressure, productivity optics, and the mistaken belief that analytics or AI summaries can replace discovery. Those cuts often backfire when teams ship faster but learn slower.

What causes user research to be undervalued inside organizations?

Many leaders view UX research as slow and hard to measure, unlike the metrics in marketing or engineering. This perception leads to budget cuts or sidelining of research. Demonstrating ROI through case studies, usability testing tools, and metrics like task success rates can shift perceptions.

What are the 12 steps in the research process?

There is no single canonical twelve-step list; most versions break the same core activities into finer-grained steps. The research process involves identifying a problem, conducting a literature review, formulating a hypothesis and objectives, designing the study, collecting and analyzing data, testing hypotheses, and finally preparing a report of the findings. While the specific steps vary by field, these core activities form a structured approach to answering a research question or solving a problem.

What are the 5 main steps of research?

Five Broad Stages of Research

1. The Exploratory Stage. This is the first stage of the process. …

2. The Narrowing Down Stage. The researcher has done enough groundwork in the exploratory stage to identify the tentative research subject. …

3. The Revisiting Stage. …

4. The Writing Stage. …

5. The Review Stage.

What unique value do UX researchers provide that AI can’t?

Understanding why people behave the way they do; navigating organizational politics; designing responsibly in sensitive domains; telling evidence-backed stories that move leaders to act. As UXPA notes, those political, emotional, and strategic dimensions are exactly where human researchers excel.

How can UX researchers show business impact?

Map findings to adoption, activation, retention, revenue, and risk. Replace vague statements with quantified opportunities and concrete trade-offs. Show the cost of not knowing.

Do leading companies still invest in UX research?

Yes, especially where trust, safety, and differentiation matter. The names vary by industry, but the common thread is simple: when the stakes are high, guessing is expensive.

How should researchers use AI without losing rigor?

Use AI to accelerate transcription, drafting, clustering, and rehearsal. Then verify, contextualize, and decide. Or, as Eniola Abioye says, treat it like your research co-pilot, not the pilot.